Fighting a DDoS Attack: A post-mortem

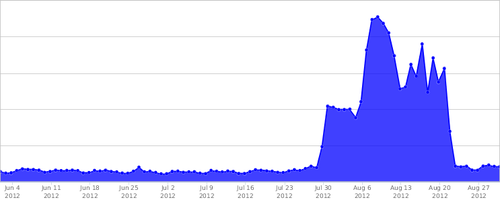

We previously wrote about downtime caused by a DDoS attack. Since then, the attack has gotten worse, but we have also gotten better at fighting it.

The Attack

At the end of July, we started getting hit by a distributed denial of service (DDoS) attack. The attackers are going after domains with a certain term in them. For example, pretend the term was “bird” and they were attacking birdwatchers.net and houses-for-birds.biz. The attack wasn’t aimed at Harmony or our clients directly. Instead, our clients were caught up in a larger, broader attack.

It was a true DDoS, coming from more than a million unique IP addresses.

The traffic was a mix of legitimate-looking page requests and obviously bad requests. The bad requests were mostly HTTP POST requests to URLs that don’t accept POSTs, and GET requests with strange query strings.

Mitigation Strategy 1: Short-circuit bad requests

Harmony is built to scale well; we regularly have sites featured on Reddit or Hacker News, and that traffic doesn’t stress our infrastructure at all. However, the attack used cache-busting techniques that made requests skip our cache layer and hit a slower code path in our app.
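The post doesn’t say exactly which cache-busting tricks the attackers used, beyond GET requests with strange query strings. As an illustration only, the Python sketch below shows why a cache keyed on the full URL misses on every random query string, along with one common countermeasure: building the cache key from an allowlist of query parameters. This countermeasure is not necessarily what Harmony did, and `ALLOWED_PARAMS`, `normalized_cache_key`, and `Cache` are hypothetical names.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit

# Hypothetical allowlist: the only query parameters that change page content.
ALLOWED_PARAMS = {"page", "sort"}


def naive_cache_key(url: str) -> str:
    # Keys on the full URL, so every random query string is a new key (a miss).
    return url


def normalized_cache_key(url: str) -> str:
    """Build a cache key that ignores query parameters the app doesn't use."""
    parts = urlsplit(url)
    kept = sorted(
        (k, v)
        for k, v in parse_qsl(parts.query, keep_blank_values=True)
        if k in ALLOWED_PARAMS
    )
    query = urlencode(kept)
    base = f"{parts.netloc}{parts.path}"  # include the host for multi-site setups
    return f"{base}?{query}" if query else base


class Cache:
    """Tiny in-memory cache that counts hits and misses."""

    def __init__(self, key_fn):
        self.key_fn = key_fn
        self.store = {}
        self.hits = 0
        self.misses = 0

    def get(self, url, render):
        key = self.key_fn(url)
        if key in self.store:
            self.hits += 1
        else:
            # Cache miss: fall through to the slow rendering path.
            self.misses += 1
            self.store[key] = render(url)
        return self.store[key]


# Three requests for the same page, each with a random cache-busting parameter.
urls = ["/birds?cb=1", "/birds?cb=2", "/birds?cb=3"]
for key_fn in (naive_cache_key, normalized_cache_key):
    cache = Cache(key_fn)
    for url in urls:
        cache.get(url, lambda u: f"<html>{u}</html>")
    print(key_fn.__name__, cache.hits, cache.misses)
# naive_cache_key 0 3
# normalized_cache_key 2 1
```

With the naive key, every attacking request reaches the slow path. With the normalized key, only the first one does. The trade-off is that any parameter missing from the allowlist is silently ignored for caching, so the allowlist has to stay in sync with the app.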

Our first response was to short-circuit invalid POSTs. We changed our routing layer to recognize the few types of POST request we actually support and to turn all other POSTs into HTTP errors.
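Harmony’s routing layer isn’t shown in the post, so the following is only a minimal sketch of the idea, written as Python WSGI middleware. It assumes a hypothetical allowlist, `ALLOWED_POST_PATHS`, and rejects every other POST with a `405 Method Not Allowed` before the application code runs. The specific paths and the choice of status code are illustrative assumptions.

```python
# Hypothetical paths that legitimately accept POST; every other POST is rejected.
ALLOWED_POST_PATHS = frozenset({"/contact", "/forms/submit", "/login"})


def short_circuit_posts(app):
    """Wrap a WSGI app so POSTs to unsupported paths never reach it."""

    def middleware(environ, start_response):
        method = environ.get("REQUEST_METHOD", "GET")
        path = environ.get("PATH_INFO", "/")
        if method == "POST" and path not in ALLOWED_POST_PATHS:
            body = b"Method Not Allowed"
            # A 405 response should carry an Allow header; "GET, HEAD" is an
            # assumption about what these paths do accept.
            start_response(
                "405 Method Not Allowed",
                [
                    ("Content-Type", "text/plain"),
                    ("Content-Length", str(len(body))),
                    ("Allow", "GET, HEAD"),
                ],
            )
            return [body]
        # Everything else (GETs, allowed POSTs) goes to the real app.
        return app(environ, start_response)

    return middleware
```

Because the check happens before the app is called, a rejected request costs a dictionary lookup and a short response instead of a trip through the slow code path. The same check could also live in the load balancer, which would stop these requests even earlier.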

This worked for a while and got Harmony back up, but the attack ramped up in the following weeks.

Mitigation Strategy 2: Moar¹ Hardware!

To deal with the increased load, we added hardware. At first, we simply beefed up our existing boxes, which kept us ahead of the attack a bit longer.

On August 17, we switched to a new load balancer to give us more flexibility. We ran into problems during the installation, and our maintenance window ballooned to over an hour. The downtime was extremely frustrating, but the new load balancer was a necessary addition that gives us more control over how requests are handled.

Mitigation Strategy 3: Request Filtering

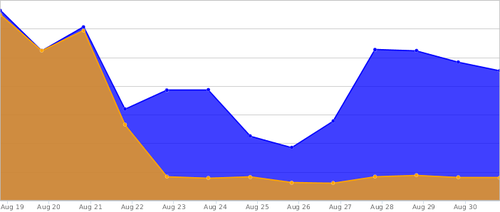

Blue shows requests received by the load balancer; orange shows requests that make it to the app servers.

With our new load balancer, we can now identify potential attackers, IP spoofing, and illegitimate requests, and block them before they get near our app servers. We’ve been really happy with the results, and we have a lot more room to respond to future attacks. Although we continue to see spikes on the load balancer, our application servers are back to their normal level of traffic.
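The post doesn’t name the load balancer or describe its filtering rules. One common building block for identifying potential attackers is per-client rate limiting, which many load balancers and reverse proxies offer natively. The Python sketch below is a minimal sliding-window limiter that illustrates the idea. The class name and the thresholds are hypothetical, and this is not a description of Harmony’s configuration.

```python
import time
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allow at most `limit` requests per client IP within `window` seconds."""

    def __init__(self, limit: int, window: float, clock=time.monotonic):
        self.limit = limit
        self.window = window
        self.clock = clock  # injectable so the limiter can be tested deterministically
        # ip -> timestamps of the requests that were let through recently
        self.requests = defaultdict(deque)

    def allow(self, ip: str) -> bool:
        now = self.clock()
        q = self.requests[ip]
        # Drop timestamps that have fallen out of the window.
        while q and now - q[0] >= self.window:
            q.popleft()
        if len(q) >= self.limit:
            return False  # over the limit: block before reaching the app servers
        q.append(now)
        return True


# Hypothetical policy: 100 requests per IP per 10 seconds.
limiter = SlidingWindowLimiter(limit=100, window=10.0)
if not limiter.allow("203.0.113.7"):
    pass  # e.g. respond with 429 Too Many Requests instead of proxying
```

Against more than a million source IPs, each of which may send only a few requests, per-IP limits alone catch only the noisiest clients. In practice they are combined with rules based on request shape, such as rejecting unsupported POSTs. This sketch also never evicts idle IPs, so a real implementation would need to bound its memory.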

Other downtime

On August 28, we experienced 12 minutes of downtime due to an upstream DDoS attack related to DNS. This attack wasn’t directed at us, but we felt its effects.

In addition, we’ve had brief blips of downtime caused by maintenance and load testing rather than by attacks. We are improving our infrastructure to eliminate self-inflicted downtime while continuing to stay ahead of attacks.

We’ve been using our status page as a way to be transparent about our downtime. We want to be open about our failures, as we work to eliminate them.

Moving Forward

We hate even a minute of downtime, and we know our customers hate it as much as we do. We’ve learned a lot this past month about the bottlenecks Harmony faces, and we are working hard to smooth them out. We have a number of infrastructure improvements planned for the coming months; expect to hear more about them soon.

¹ I gave a talk on fighting this attack at BarCampGR last week, and the first question was, “Why did you spell ‘moar’ that way?”
